Not all search bots are able to read meta tags, and so this is where the robots.txt file comes into play. This simple text file contains instructions to search robots about a website. It is a way of communicating to web crawlers and other web robots about what content is allowed for public access and what parts are protected.

“Most major search engines (including Google, Bing and Yahoo) recognize and honor Robots.txt requests file.

“

What is robots.txt?

Why Should you Care About Robots.txt?

Robots.txt plays an important role. It informs search engines on how they can excellently crawl your website.

Utilizing the robots.txt file, you can stop search engines from accessing specific parts of your website, control duplicate content, and give search engines useful information on how they can crawl your website.

It would be best if you were careful while making modifications to your robots.txt, though: this file can make significant parts of your website unreachable for search engines.

Robots.txt Example

An instance of what an easy robots.txt file for a WordPress website may look like:

User-agent: *

Disallow: /wp-admin/

Let’s define the anatomy of a robots.txt file based on the above example:

User-agent: the user-agent means for which search engines the directions that follow.

*: this implies that the directives are for all search engines.

Disallow: this is a directive telling what content is not available to the user-agent.

/wp-admin/: this is the path that is unavailable for the user-agent.

This robots.txt file implies all search engines to stay away from the /wp-admin/ directory.

Disallow Directive in Robots.txt

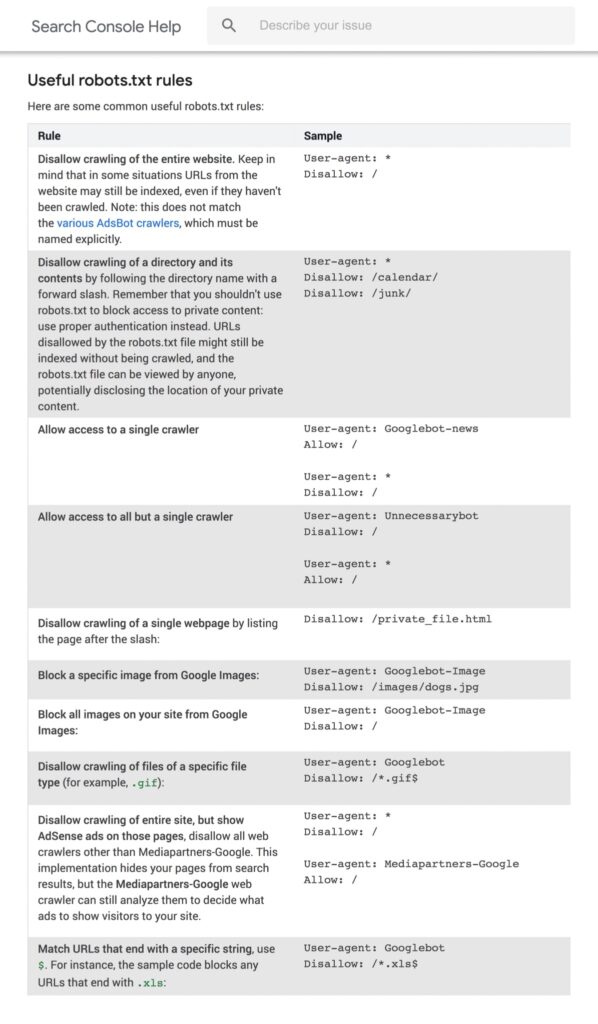

You can instruct search engines not to access individual files, pages, or parts of your website. This is done utilizing the Disallow directive. The Disallow directive is tracked by the path that should not be accessed. If no path is specified, the directive is omitted.

Example

User-agent: *

Disallow: /wp-admin/

In this all search engines are instructed not to access the /wp-admin/ directory.

Add Sitemap to Robots.txt

Even though the robots.txt file was created to inform search engines what pages not to crawl, the robots.txt file can even tell search engines to the XML sitemap. This is backed by Google, Bing, Yahoo, and Ask.

The XML sitemap should be referenced as a complete URL. The URL should not have the same host as the robots.txt file.

Connecting the XML sitemap in the robots.txt file is the acceptable practice we recommend you always to do, even though you may have added your XML sitemap in Google Search Console or Bing Webmaster Tools. Recall, there are more search engines out there.

Please note that it’s likely to reference numerous XML sitemaps in a robots.txt file.

Add Sitemap to Robots.txt

Robots.txt best practices are classified as follows:

Location and filename: The robots.txt file needs to be placed in the root of a website and have the filename robots.txt, for instance, https://www.example.com/robots.txt. The URL for the robots.txt file is case-sensitive.

Order of precedence: It’s essential to note that search engines control robots.txt files differently. By default, the first matching directive wins.

Yet, with Google and Bing, particularity wins. For instance, an Allow directive wins over a Disallow directive.

Only one directives group per robot: You can only specify one group of directives every search engine. Having numerous groups of directives for one search engine will confuse them.

Be specific: The Disallow directive starts partial matches as well. Be as specific as potential when defining the Disallow directive to stop unintentionally disallowing access to files.

Directives for all robots while also including directives for a particular robot: For a robot, only one directives group is valid. In case directives meant for all robots are tracked with directives for a distinct robot, only these particular directives will be considered. In the particular robot to also follow all robots’ directives, you need to repeat these directives for the specific robot.

Monitor your robots.txt file: It’s essential to monitor your robots.txt file for modifications. There are lots of problems where wrong directives and hasty changes to the robots.txt file cause important SEO issues.

Robots.txt Guide

Allow all robots access to everything.

There are numerous ways to inform search engines they can access all files:

User-agent: *

Disallow:

Or having a blank robots.txt file or not having any a robots.txt file.

Disallow all robots access to everything.

The example robots.txt down inform all search engines not to access the whole website:

User-agent: *

Disallow: /

Please note that just ONE additional character can make all the difference.

All Google bots don’t have access.

User-agent: Googlebot

Disallow: /

Please note that when disallowing Googlebot, this runs for all Google bots. That includes Google robots searching, for example, for news (Googlebot-news) and images (Googlebot-images).

All Google bots, except for Googlebot news, don’t have access.

User-agent: Googlebot

Disallow: /

User-agent: googlebot-news

Disallow:

Googlebot and Slurp don’t have any access.

User-agent: Slurp

User-agent: googlebot

Disallow: /

All robots don’t have access to two directories.

User-agent: *

Disallow: /admin/

Disallow: /private/

All robots don’t have access to one specific file.

User-agent: *

Disallow: /directory/some-pdf.pdf

Googlebot doesn’t have access to /admin/ and Slurp doesn’t have access to /private/

User-agent: googlebot

Disallow: /admin/

User-agent: Slurp

Disallow: /private/

Frequently Asked Questions About Robots.txt

For robots.txt files, Google supports a file size limit of 500 kibibytes (512 kilobytes). Any content above this limit file size may be omitted.

It’s unclear whether other search engines have a file size limit for robots.txt files.

Here’s an example of robots.txt content: User-agent: * Disallow: This tell-all crawler they can access the complete website.

If you assign a robots.txt to “Disallow all,” you’re basically describing all crawlers to keep away. No crawlers, including Google, are permitted access to your website. This implies they won’t be allowed to crawl, index, and rank your site. Because of this, your organic traffic will decrease.

If you assign a robots.txt to “Allow all,” you inform every crawler they can access every URL on the website. There are absolutely no rules of engagement. Please note that this is equal to having an empty robot.txt or having no robots.txt.

Search engines will respect the robots.txt; it’s always a directive and not a mandate.

robots.txt file is essential for SEO purposes. For larger websites, the robots.txt is necessary to give search engines clear instructions on what content not to access.